Now that we’ve got our first SDDC up and running with a VPN connection back to our office/data center, it’s time to tear it all down again and turn it into something a little more useful. I need to define the word useful before I go on, because this isn’t going to be a topology that everyone wants or needs. It’s simply a demo of what you can do with SDDCs and AWS at scale. If you have multiple SDDCs in a region or across multiple regions and need them to communicate with multiple AWS accounts (possibly managed by AWS Control Tower), then this will be relevant for you. Or if, like me, you’re a networking nerd and just want to see something cool, read on.

This can obviously also work on a smaller scale, but as some of the components I’ll be deploying will lead to increased AWS charges, there’s likely to be a cheaper way to accomplish your goals if you have a smaller environment.

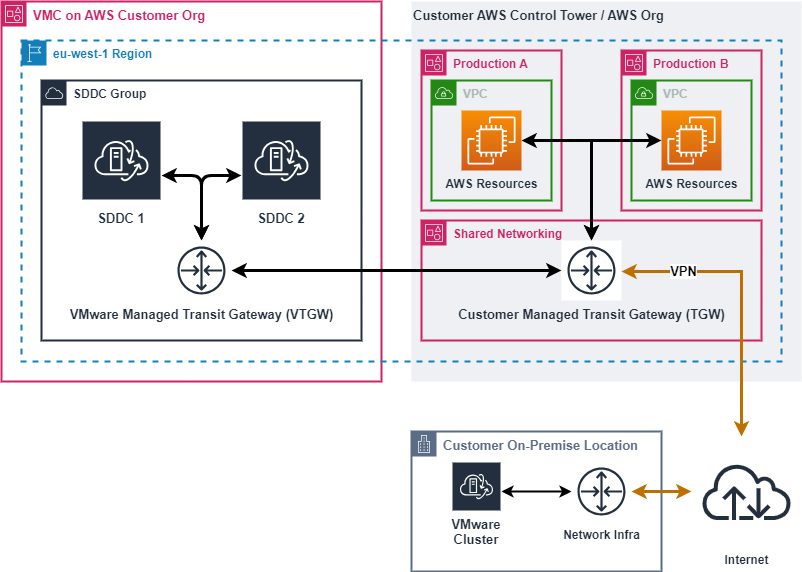

For reference as we move through this exercise, the diagram above is roughly the end state I want for my environment.

On the left side of the diagram is where our VMware Cloud components live. Right now we’ve just got a single SDDC, so I’ll be creating an SDDC group and adding our SDDC to it. The functionality of an SDDC group goes well beyond what we’ll be doing here. It can connect multiple SDDCs together via high-bandwidth, low-latency links (what VMware calls “Transit Connect”) across up to three AWS regions. SDDC groups can only be formed with SDDCs that are within the same VMC organisation. If your intention isn’t to peer with an AWS account, you can also connect an AWS Direct Connect link to an SDDC group.

Getting back to our scenario, on the right side of the diagram is our existing AWS infrastructure. We’ve got AWS Control Tower set up, and to keep things neat and tidy I’ve got a ‘Network Shared Services’ account where all the network interconnects terminate and where our transit gateway lives. I’ve also got a couple of other accounts running various workloads: production, test & dev, sandbox, stuff like that. In some cases we’ve got VPCs created in our network account and shared to those accounts, while other accounts are free to create their own VPCs and request to attach them to the transit gateway in the networking account.

The bottom of the diagram is the easiest part. I’ve got a VMware cluster on-premises which is currently attached to the AWS transit gateway using a route-based VPN connection. If I were in need of more bandwidth or lower latency than a VPN could provide, I’d look at a Direct Connect (or multiple Direct Connects for redundancy).

With all that covered, let’s launch into the demo.

We start off pretty basic by creating the SDDC group, then things get a little more complicated. To establish the peering between the VMware managed TGW and our TGW, I needed to provide the account number where our TGW is located and the ID of the TGW itself. I then needed to accept the peering request manually. I haven’t enabled auto-accept on the TGW, and unless you’d happily give a set of your house keys to everyone who has access to your AWS organisation, you shouldn’t either. The potential for chaos on a grand scale is just too much of a risk to accept.
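I accepted the peering request in the console, but if you’d rather script it, a minimal sketch could look like the following. It assumes `ec2` is a boto3 EC2 client in the networking account and in the region where the TGW lives. The function name, the account number and the TGW ID are placeholders of mine, not anything VMC hands you. The extra check on the requester account is my own addition, in keeping with the no-auto-accept rule above.

```python
def accept_expected_peering(ec2, our_tgw_id, expected_requester_account):
    """Accept pending TGW peering requests, but only the ones we expect.

    `ec2` is assumed to be a boto3 EC2 client (or any object exposing the
    same two methods). Returns the IDs of the attachments that were accepted.
    """
    response = ec2.describe_transit_gateway_peering_attachments(
        Filters=[{"Name": "state", "Values": ["pendingAcceptance"]}]
    )
    accepted = []
    for attachment in response["TransitGatewayPeeringAttachments"]:
        # Skip requests aimed at a different transit gateway.
        if attachment["AccepterTgwInfo"]["TransitGatewayId"] != our_tgw_id:
            continue
        # Skip requests that do not come from the account we expect.
        if attachment["RequesterTgwInfo"]["OwnerId"] != expected_requester_account:
            continue
        attachment_id = attachment["TransitGatewayAttachmentId"]
        ec2.accept_transit_gateway_peering_attachment(
            TransitGatewayAttachmentId=attachment_id
        )
        accepted.append(attachment_id)
    return accepted
```

You would call it with something like `accept_expected_peering(boto3.client("ec2"), "tgw-0123456789abcdef0", "111111111111")`, swapping in your own TGW ID and the account number of the VMware managed TGW.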

With that done, I decided to create a prefix list with VMC. That will make routing updates a little easier. A prefix list creates an aggregated list of all network routes from the VMC compute gateway and shares it with the AWS account you specify. So instead of manually adding or removing a route every time you add a new segment or remove an old one on VMC, the prefix list will take care of it and ensure our transit gateway has an up-to-date routing table. Better still, because we’re running BGP over the VPN, the routing updates will also be pushed down to the on-premises router without us having to do anything.

To finish up the VMC prefix list setup, I need to accept the resource share from VMC. I then need to create a reference in the transit gateway routing table for it. That’s it. Almost no effort to have dynamic routing everywhere.
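Both of those steps can also be scripted. The sketch below is an illustration rather than what I actually ran: it assumes `ram` and `ec2` are boto3 RAM and EC2 clients in the networking account, and the function names and every ID passed in are placeholders you would replace with your own values.

```python
def accept_vmc_prefix_list_share(ram, expected_sender_account):
    """Accept pending RAM invitations sent by the expected account.

    `ram` is assumed to be a boto3 RAM client. Returns the ARNs of the
    invitations that were accepted.
    """
    response = ram.get_resource_share_invitations()
    accepted = []
    for invitation in response["resourceShareInvitations"]:
        if invitation["status"] != "PENDING":
            continue
        # Only accept shares that come from the account we expect.
        if invitation["senderAccountId"] != expected_sender_account:
            continue
        arn = invitation["resourceShareInvitationArn"]
        ram.accept_resource_share_invitation(resourceShareInvitationArn=arn)
        accepted.append(arn)
    return accepted


def reference_prefix_list(ec2, route_table_id, prefix_list_id, attachment_id):
    """Point the shared prefix list at the peering attachment.

    `ec2` is assumed to be a boto3 EC2 client. Returns the state reported
    for the new prefix list reference.
    """
    response = ec2.create_transit_gateway_prefix_list_reference(
        TransitGatewayRouteTableId=route_table_id,
        PrefixListId=prefix_list_id,
        TransitGatewayAttachmentId=attachment_id,
    )
    return response["TransitGatewayPrefixListReference"]["State"]
```

Filtering on the sender account is my own precaution. The prefix list ID only becomes usable in your account once the share has been accepted, so the two functions need to run in that order.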

After creating the necessary firewall rules within the SDDC, I have full connectivity to my AWS accounts and my SDDCs from on-premises via a single VPN connection. I also have bi-directional connectivity from all of my AWS accounts to my SDDC. A new segment created in the VMC console is immediately pushed to the SDDC vCenter and almost immediately pushed to the AWS and on-premises routing tables. Temper your expectations, though: it is BGP after all.
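If you want to confirm that a new segment has made it into the shared prefix list rather than waiting and hoping, something like this sketch could do it. It assumes `ec2` is a boto3 EC2 client in the account the prefix list was shared with. The function names, the prefix list ID and the segment CIDR are placeholders of mine.

```python
import ipaddress


def prefix_list_cidrs(ec2, prefix_list_id):
    """Collect every CIDR in a managed prefix list, following pagination."""
    cidrs = []
    kwargs = {"PrefixListId": prefix_list_id}
    while True:
        page = ec2.get_managed_prefix_list_entries(**kwargs)
        cidrs.extend(entry["Cidr"] for entry in page["Entries"])
        token = page.get("NextToken")
        if not token:
            return cidrs
        kwargs["NextToken"] = token


def segment_is_advertised(ec2, prefix_list_id, segment_cidr):
    """Return True if the segment is covered by any prefix list entry."""
    segment = ipaddress.ip_network(segment_cidr)
    for cidr in prefix_list_cidrs(ec2, prefix_list_id):
        entry = ipaddress.ip_network(cidr)
        # subnet_of() only compares networks of the same IP version.
        if entry.version == segment.version and segment.subnet_of(entry):
            return True
    return False
```

A call such as `segment_is_advertised(boto3.client("ec2"), "pl-0123456789abcdef0", "10.10.1.0/24")` only tells you the prefix list has caught up. The BGP update down to the on-premises router still arrives in its own time.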

The networking is now complete and, like all good projects, my introductory journey into VMware Cloud on AWS is experiencing some scope creep. So stay tuned for part three of my two-part series, in which I’ll cover the full setup of VMware HCX from my on-premises cluster to my VMC SDDC and migrate some VMs to the cloud.