Quick Links

Part 1: Building the VCF on VxRail management cluster

Part 2: Virtual Infrastructure Workload Domain creation

Part 3: Deploy, Configure and Test VMware HCX

Part 4: Expanding Workload Domains

Following on from part 1, I’ve now got a four-node VxRail cluster running all the required VCF management VMs. As I’m converting an entire VxRack, I’m not using the consolidated architecture. In that design, management and workload/production VMs run on the same cluster. It’s meant for small environments of up to about six nodes. Anything beyond that falls into the standard architecture model. So right now, technically I can’t run any production VMs on my VCF on VxRail deployment. Enter stage 2 of the process.

In stage 2, I’ll steal another three nodes from the VxRack SDDC workload domain, decommission them, convert them to VxRail nodes and build another cluster. Except there’s a little bit more to it than that.

In an ideal automated world, I’d have finished up day one by decommissioning the nodes I need for this stage of the build and kicking off the automation to convert them. Then when I get into the office at the start of day 2, I’d have three freshly converted VxRail nodes waiting to be built. It needn’t be only three nodes of course. If I could have freed up more than that from my VxRack SDDC workload domain, I’d have decommissioned as many as I could realistically have gotten away with: enough left behind to keep the production workload running (with some overhead of course) without violating any vSAN storage policies. The more nodes I can free up and convert now, the fewer iterations of convert & build I need to do in the future.
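To make the "how many nodes can I get away with freeing" reasoning concrete, here’s a rough back-of-the-envelope sketch. It assumes identical nodes and RAID-1 mirroring, which needs 2 × FTT + 1 hosts. The function names, the 25% headroom and the one spare host are illustrative assumptions of mine, not something SDDC Manager works out for you.

```python
import math


def min_hosts_for_mirroring(ftt: int) -> int:
    """Minimum hosts vSAN needs for RAID-1 mirroring at a given failures-to-tolerate."""
    return 2 * ftt + 1


def nodes_i_can_free(total_nodes: int, used_fraction: float,
                     ftt: int = 1, headroom: float = 0.25,
                     spare_hosts: int = 1) -> int:
    """Estimate how many nodes can leave the source cluster (illustrative only).

    used_fraction: share of the cluster's current capacity in use (0.0 to 1.0).
    headroom:      assumed fraction of the remaining capacity to keep free.
    spare_hosts:   assumed extra hosts kept above the policy minimum for rebuilds.
    """
    # Nodes needed to hold today's workload while keeping the headroom free.
    for_capacity = math.ceil(total_nodes * used_fraction / (1 - headroom))
    # Nodes needed so the storage policy stays compliant, plus the spare.
    for_policy = min_hosts_for_mirroring(ftt) + spare_hosts
    keep = max(for_capacity, for_policy)
    return max(0, total_nodes - keep)
```

Treat the result as a starting point for a conversation rather than a sizing answer; real clusters also need checking for CPU, memory and any policies stricter than FTT=1 mirroring.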

Without automation converting the nodes, I’m looking at just under half of day 2 to get the three nodes where they need to be. I’m going to base the timing at the end of this post on a non-automated process.

Once I kick off the RASR reset on all three nodes, I know I’ve got some time to spend elsewhere. So I log into VxRail SDDC manager and create a VI workload domain. This is a little different than how you’d create one in VxRack SDDC world. There you’d pick nodes out of the pool, give the wizard some details and it’d build your cluster for you. In VCF on VxRail, we haven’t yet got the nodes to create the cluster with. So we more or less half create the VI workload domain and then add the cluster of nodes afterward. I’ll move on from my gross oversimplification and instead show you the process.

Log into SDDC manager, find the Workload Domain button and click it. Choose the only selectable option, ‘VI – VxRail Virtual Infrastructure Setup’.

I gave my new workload domain the imaginative name ‘WorkloadDomain2’. Next, give vCenter details.

The vCenter doesn’t exist yet of course. SDDC manager will deploy an empty one, and that’s what you’ll build your VxRail cluster into. The vCenter DNS name I provided here was already set up on the DNS server.
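Since the wizard relies on that DNS name already being in place, it can be worth a quick check before clicking through. The sketch below uses only Python’s standard `socket` module. The hostname and IP in the example are placeholders, and checking the reverse (PTR) record as well is my own assumption about what’s sensible rather than a documented requirement of the wizard.

```python
import socket


def check_dns(fqdn, expected_ip,
              resolve=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Return a list of problems; an empty list means forward and reverse agree."""
    problems = []
    try:
        ip = resolve(fqdn)  # forward (A record) lookup
    except OSError as exc:
        return [f"forward lookup failed for {fqdn}: {exc}"]
    if ip != expected_ip:
        problems.append(f"{fqdn} resolves to {ip}, expected {expected_ip}")
    try:
        name = reverse(ip)[0]  # reverse (PTR record) lookup
    except OSError as exc:
        problems.append(f"reverse lookup failed for {ip}: {exc}")
    else:
        # Compare case-insensitively and ignore a trailing dot.
        if name.lower().rstrip(".") != fqdn.lower().rstrip("."):
            problems.append(f"{ip} reverses to {name}, expected {fqdn}")
    return problems


if __name__ == "__main__":
    # Placeholder values; substitute the name and IP planned for the new vCenter.
    for problem in check_dns("wd2-vcenter.example.local", "192.0.2.10"):
        print(problem)
```

The `resolve` and `reverse` parameters exist only so the function can be exercised without a live DNS server; in normal use the defaults are fine.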

Review all the details entered and click finish. The SDDC dashboard will reappear and the progress of the vCenter deployment is shown in the tasks view at the bottom of the UI. If the tasks view is hidden, the buttons at the bottom right of the window will expand or maximise it.

About 15 minutes later it was done and I had a new vCenter in my list with only a datacenter created within it. Once this process finishes, the new VI workload domain will display in the dashboard, but will show a status of activating.

It’ll continue to show this status until the VxRail cluster is added and the domain creation is completed. So I’ll get onto that next.

With the RASR reset finished up on the three nodes, I rebooted to the IDSDM and kicked off the factory reset. This is quick in comparison to the RASR reset, and when it was done, I rebooted the nodes so the automated build would kick off. While that was in progress, I copied the switch config applied to the ports for the management nodes and applied it to the ports for these three nodes as well.

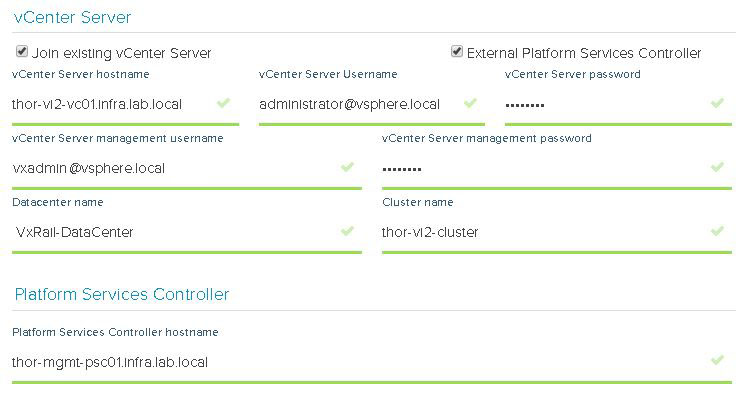

I went through my usual prep for a VxRail cluster build (briefly covered in part 1), then kicked off the install. The only difference this time is that I’ve already got a vCenter deployed for this cluster, so I chose to join an existing vCenter and use an external PSC.

As in the management cluster build, I also selected ‘None’ for the logging option.

I entered all the other usual details, validation passed and I started the cluster build. It finished up quite quickly and I was back into SDDC manager to complete the VI workload domain creation.

Within the workload domains menu, I chose my currently ‘activating’ WorkloadDomain2 and selected “Add VxRail Cluster” from the actions menu.

The cluster addition dialog opened and, after a few seconds, displayed the VxRail cluster I had just finished building.

I entered the host password and clicked “copy to all hosts”. Probably more of a time saver if I was building a huge cluster.

Next up is NSX settings. Very self-explanatory, nothing out of the ordinary here. I entered my VXLAN VLAN ID and some IP settings for both the NSX manager and controller cluster.

Moving on to licenses, which in my case were automatically populated from those I entered right after the SDDC bringup. If yours aren’t there, they can be added within the SDDC UI under Administration > Licensing.

Finally, the now familiar review screen. I clicked finish and the second half of the VI workload domain creation started.

I monitored the progress in the SDDC manager tasks view. It took about 40 minutes to run the cluster addition tasks and display my new VI workload domain in SDDC manager.

Logging into vCenter, I can see that my cluster is present and NSX has been deployed & configured.

Of course, the cluster is a little empty right now, containing only the NSX controllers and VxRail Manager. I’m going to change that in part 3 when I deploy HCX and run some test migrations from the VxRack SDDC.

Briefly back to one of the questions asked at the start of part 1:

- How long is it going to take? – Total so far is the best part of 2 days. Although I only used three nodes to create my first VI workload domain, I could have built it with many more. It would not have added a significant time penalty to the process of creating and finalising the workload domain. The penalty there would have been the additional time to convert the nodes in the first place.
